(Version originale en français)

I very frequently compile Linux kernels, often during training sessions or engineering services (mainly in the field of embedded systems or drivers development), sometimes while writing articles or books.

Compilation time varies greatly depending on the amount of code (drivers, filesystems, protocols, etc.) and on the CPU power of the host machine. On a mid-range PC, compiling a kernel adjusted for an embedded system (with very few drivers) lasts about three minutes. On an entry level machine (or a little old one), compiling a generic kernel for PC (with hundreds of drivers as modules) can last an hour.

To take advantage of the parallelism offered by the current processors (multiprocessor, multicore or hyper-threading), the make command allows us to run multiple jobs. So

$ make -j 4

guarantees there is always four compilation jobs active.

I have long reiterated that « if you have N processors (or cores, or virtual CPUs) available, you will save time by starting 2N compilation jobs in parallel« . The idea is that for every proccessor we have a job that performs the compilation (consuming CPU time) while another job is saving the results of the previous compilation and loading the source file of the next task. But wait… is this true?

Test script

I wrote the following script which downloads and unpack the required kernel sources, then makes several compilations using a variable number of jobs. For example, if we start

$ ./test-make-j.sh 3 5 8

It performs three complete compilations: one with three tasks in parallel, one with the five jobs and the last with eight jobs. The results are recorded in a text file. The script follows.

test-make-j.sh #! /bin/sh KERNEL_VERSION="linux-3.2" KERNEL_URL_PATH="www.kernel.org/pub/linux/kernel/v3.0/" RESULT_FILE="compilation-timing.txt" if [ "$#" -eq 0 ] then echo "usage: $@ jobs_number..." >& 2 exit 0 fi if [ ! -d "${KERNEL_VERSION}" ] then if [ ! -f "${KERNEL_VERSION}.tar.bz2" ] then wget "${KERNEL_URL_PATH}/${KERNEL_VERSION}.tar.bz2" if [ $? -ne 0 ] || [ ! -f "${KERNEL_VERSION}.tar.bz2" ] then echo "unable to obtain ${KERNEL_VERSION} archive" >&2 exit 1 fi fi tar xjf "${KERNEL_VERSION}.tar.bz2" if [ $? -ne 0 ] then echo "Error while uncompressing kernel archive" >&2 exit 1 fi fi cd "${KERNEL_VERSION}" echo "# Timings of ${KERNEL_VERSION} compilations" >> "${RESULT_FILE}" nb_cpu=$(grep "^processor" /proc/cpuinfo | wc -l) echo "# Processors: ${nb_cpu}" >> "${RESULT_FILE}" affinity=$(taskset -p $$ | sed -e 's/^.*://') >> "${RESULT_FILE}" echo "# Affinity mask: ${affinity}" >> "${RESULT_FILE}" for nb in "$@" do echo "# Compiling with $nb simultaneous jobs" >> "${RESULT_FILE}" make mrproper make i386_defconfig sync sleep 10 # Let's all calm down start=$(date "+%s") make -j $nb sync end=$(date "+%s") # This script will fail during february 2038 ;-) echo "$nb $((end - start))" >> "${RESULT_FILE}" done

Results

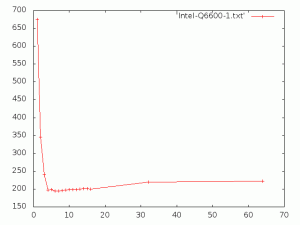

Here are the results of a run on an Intel Q6600 Quad-Core (file: Intel-Q6600-1.txt)

# Timings of linux-3.2 compilations # Processors: 4 # Affinity mask: f # Compiling with 1 simultaneous jobs 1 675 # Compiling with 2 simultaneous jobs 2 346 # Compiling with 3 simultaneous jobs 3 241 # Compiling with 4 simultaneous jobs 4 197 # Compiling with 5 simultaneous jobs 5 198 # Compiling with 6 simultaneous jobs 6 194 # Compiling with 7 simultaneous jobs 7 195 # Compiling with 8 simultaneous jobs 8 196 # Compiling with 9 simultaneous jobs 9 197 # Compiling with 10 simultaneous jobs 10 198 # Compiling with 11 simultaneous jobs 11 198 # Compiling with 12 simultaneous jobs 12 198 # Compiling with 13 simultaneous jobs 13 200 # Compiling with 14 simultaneous jobs 14 201 # Compiling with 15 simultaneous jobs 15 201 # Compiling with 16 simultaneous jobs 16 200

Let’s see them graphically with this little Gnuplot command line. On the horizontal axis, lies the number of concurrent jobs and on the vertical axis is the compilation time (in seconds).

$ echo "set terminal png size 640,480 ; set output './Intel-Q6600-1.png'; plot 'Intel-Q6600-1.txt' with linespoints" | gnuplot

Apparently, the best results are achieved (with some fluctuations) with make-j 4. Let’s try to confirm this. Before running again the script, we limit it to two processors with the following command which binds on processors 2 and 3 all the jobs launched from the running shell.

$ taskset -pc 2-3 $$

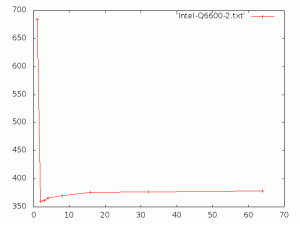

Here are the results (file: Intel-QL6600-2.txt).

# Timings of linux-3.2 compilations # Processors: 4 # Affinity mask: c # Compiling with 1 simultaneous jobs 1 684 # Compiling with 2 simultaneous jobs 2 360 # Compiling with 3 simultaneous jobs 3 362 # Compiling with 4 simultaneous jobs 4 366 # Compiling with 8 simultaneous jobs 8 370 # Compiling with 16 simultaneous jobs 16 376 # Compiling with 32 simultaneous jobs 32 377 # Compiling with 64 simultaneous jobs 64 378

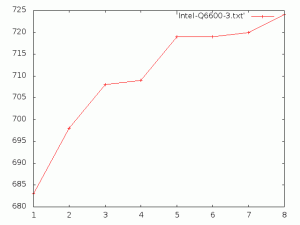

This time, it is clear that the minimum time is achieved with make-j 2. If we repeat the experiment on a single CPU, we obtain the following values (file: Intel-Q6600-3.txt).

# Timings of linux-3.2 compilations # Processors: 4 # Affinity mask: 8 # Compiling with 1 simultaneous jobs 1 683 # Compiling with 2 simultaneous jobs 2 698 # Compiling with 3 simultaneous jobs 3 708 # Compiling with 4 simultaneous jobs 4 709 # Compiling with 5 simultaneous jobs 5 719 # Compiling with 6 simultaneous jobs 6 719 # Compiling with 7 simultaneous jobs 7 720 # Compiling with 8 simultaneous jobs 8 724

Represented on the graph below:

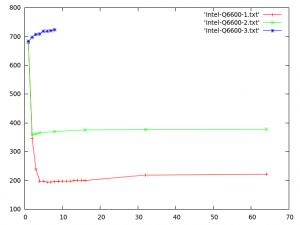

We can group these three curves on a single graph to better see their scales (I have not extended the curve of the compilation on a single CPU, but we can imagine that it continues with a slight increase).

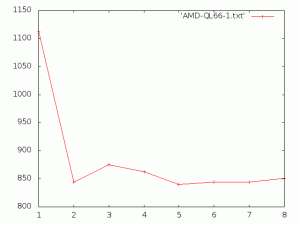

To be sure, we can repeat the experiment on a different processor with two cores (AMD QL66). The results are as follows (file: AMD-QL66-1.txt).

# Timings of linux-3.2 compilations # Processors: 2 # Affinity mask: 3 # Compiling with 1 simultaneous jobs 1 1113 # Compiling with 2 simultaneous jobs 2 844 # Compiling with 3 simultaneous jobs 3 875 # Compiling with 4 simultaneous jobs 4 863 # Compiling with 5 simultaneous jobs 5 840 # Compiling with 6 simultaneous jobs 6 844 # Compiling with 7 simultaneous jobs 7 844 # Compiling with 8 simultaneous jobs 8 851

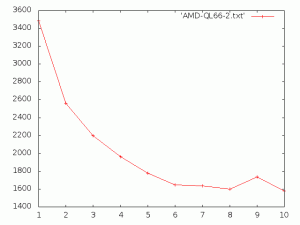

Let’s try one last experiment on the same machine (two CPU), by disabling two elements:

- prefetching of the next blocks of the disk (which can improve localized readings) with

echo 0 > /sys/block/sda/read_ahead_kb

- delayed (about 30 seconds) block writes (avoiding repetitive access to the disk in case of subsequent modification of the same block) with

mount / -o sync,remount.

This time the results are very different (file: AMD-QL66-2.txt). The times are much longer than before because for each write to disk, the process waits for data to be transmitted to the device to continue his work.

Timings of linux-3.2 compilations # Processors: 2 # Affinity mask: 3 # Compiling with 1 simultaneous jobs 1 3487 # Compiling with 2 simultaneous jobs 2 2562 # Compiling with 3 simultaneous jobs 3 2198 # Compiling with 4 simultaneous jobs 4 1963 # Compiling with 5 simultaneous jobs 5 1779 # Compiling with 6 simultaneous jobs 6 1646 # Compiling with 7 simultaneous jobs 7 1636 # Compiling with 8 simultaneous jobs 8 1602 # Compiling with 9 simultaneous jobs 9 1738 # Compiling with 10 simultaneous jobs 10 1577

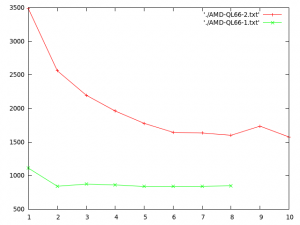

Here, the curve is closer to that than I imagined at first. Placing more jobs per CPU can take advantage of the wait times due to the disk access to progress in another compilation. Group the two curves in order to see the respective durations.

Conclusion

We see that with the quality of the I/O scheduler of Linux, and the optimized management of block devices, the best compilation time are obtained as soon as we launch one job per processor.

So I will modify my recommendation in the future as « If you have N processors available, compile your kernel with make -j N to get the best execution time. »

PS: If you have the opportunity to run this script on different architectures (8 processors, 16 processors, etc.). I am very interested in your results.

[…] To enable it, run – $ make -j n where n is the number of parallel jobs to execute. As per this analysis, the results are best when n = number of […]